This is the blog section. It has two categories: News and Releases.

Files in these directories will be listed in reverse chronological order.


We are happy to announce the latest release of Shipwright’s main projects - v0.15.z.

You may have noticed the usual “.0” in the version has been replaced with a “.z” - more on this in a minute!

Below are the key features in this release:

Builds v0.15 adds additional support for controlling which nodes a build can run on. In addition to specifying a node selector (introduced in v0.14), builds can now tolerate node taints and instruct Kubernetes to use a custom pod scheduler. The latter feature can be used with new projects like Volcano, which optimizes pod scheduling for batch workloads.
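As a rough sketch of how these scheduling controls might be combined on a single Build, the snippet below writes a manifest that sets a node selector, a toleration, and a custom scheduler. The field names `nodeSelector`, `tolerations`, and `schedulerName` reflect our reading of the v1beta1 Build API and should be checked against the API reference. The Build name, node label, taint key, and output image are hypothetical placeholders, and `volcano` is assumed to be the scheduler name registered by a Volcano installation.

```shell
# Write a Build manifest to a file so it can be reviewed before applying.
# All names, labels, and taint keys below are illustrative assumptions.
cat > build-scheduling.yaml <<'EOF'
apiVersion: shipwright.io/v1beta1
kind: Build
metadata:
  name: sample-scheduled-build
spec:
  source:
    type: Git
    git:
      url: https://github.com/shipwright-io/sample-go
    contextDir: docker-build
  strategy:
    name: buildah-shipwright-managed-push
    kind: ClusterBuildStrategy
  output:
    image: registry.example.com/my-org/sample-go:latest
  # Only schedule on nodes carrying this label (node selector, since v0.14).
  nodeSelector:
    kubernetes.io/arch: amd64
  # Tolerate a taint that would otherwise keep build pods off these nodes.
  tolerations:
    - key: example.com/build-only
      operator: Equal
      value: "true"
      effect: NoSchedule
  # Hand pod scheduling to a custom scheduler instead of the default one.
  schedulerName: volcano
EOF

# Apply once Shipwright v0.15 is installed on the cluster:
#   kubectl apply --filename build-scheduling.yaml
```

Writing the manifest to a file first keeps the example reviewable; piping the heredoc straight into `kubectl apply` works just as well.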

The CLI was updated to support Build v0.15.0 APIs.

The operator was updated to deploy Builds v0.15.2.

Install Tekton v0.68.0:

kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.68.0/release.yaml

Install v0.15.2 using the release YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.15.2/release.yaml --server-side

curl --silent --location https://raw.githubusercontent.com/shipwright-io/build/v0.15.2/hack/setup-webhook-cert.sh | bash

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.15.2/sample-strategies.yaml --server-side

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.15.0/shp_0.15.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.15.0/shp_0.15.0_macOS_$(uname -m).tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.15.0/shp_0.15.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first ensure the operator v0.15.2 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

Since v0.14.0 was released, we have done a lot of work behind the scenes to automate Shipwright’s release process and improve its security posture. Part of this includes a set of nightly GitHub Actions that scan our container images for vulnerabilities at the code and operating system level. This process covers our most recent release as well as the nightly builds that come out of the main branch.

Less than a day after Builds v0.15.0 was released, vulnerabilities in the golang.org/x/crypto and golang.org/x/oauth2 packages were disclosed. These were picked up by our nightly automation, which filed a GitHub issue notifying the community of the problem. The maintainers quickly sprang into action, submitting pull requests to patch the vulnerable code. The next night our automation detected that these vulnerabilities were fixed, and drafted a security patch release.

Two days later, we patched the Build project all over again.

All this happened as the cli and operator projects were preparing releases of their own.

Special thanks to @SaschaSchwarze0 for not only fixing these vulnerabilities, but also building many of the workflows that automate these security updates. Bravo!

Update 2025-01-07: added Operator installation instructions

We are happy to announce the v0.14.0 release of Shipwright. This is our first release since we have joined the Cloud Native Computing Foundation (CNCF) as a sandbox project.

In this release, we have put together some nice features:

Keeping your environments secure is key these days. For container images, scanning them is widely adopted. Shipwright now performs a shift left of those scans by incorporating image scanning into the image build itself. We’ll ensure that a vulnerable image never makes it into your container registry (though, you’d still have to re-scan it regularly to determine when it becomes vulnerable). This is a great safeguard for example against base images you consume in your Dockerfile that suddenly are not updated anymore.

You can read more about it in the separate blog post Building Secure Container images with Shipwright.

The Shipwright CLI finally received the first support for build parameters. You can use the --param-value argument to provide values for strategy parameters such as the Go version and Go flags in our ko sample build strategy like this: shp build create my-app --param-value go-version=1.23 --param-value go-flags=-mod=vendor.
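For comparison, the same parameters can presumably be set declaratively through `spec.paramValues` on a Build. The sketch below is an assumption-laden equivalent of the CLI call above: the source repository and output image are hypothetical placeholders, and the parameter names `go-version` and `go-flags` are taken from the command in the text.

```shell
# Declarative counterpart to the `shp build create --param-value ...` call.
# Repository URL and image name are placeholders, not values from the release.
cat > my-app-build.yaml <<'EOF'
apiVersion: shipwright.io/v1beta1
kind: Build
metadata:
  name: my-app
spec:
  source:
    type: Git
    git:
      url: https://github.com/shipwright-io/sample-go
  strategy:
    name: ko
    kind: ClusterBuildStrategy
  # Each entry corresponds to one --param-value name=value argument.
  paramValues:
    - name: go-version
      value: "1.23"
    - name: go-flags
      value: "-mod=vendor"
  output:
    image: registry.example.com/my-org/my-app:latest
EOF

# Apply with: kubectl apply --filename my-app-build.yaml
```

Quoting `"1.23"` keeps YAML from reading the version as a floating point number, which matters because parameter values are strings.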

Often the small changes are what help you. Here are some:

StepOutOfMemory as reason.

Install Tekton v0.65.1:

kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.65.1/release.yaml

Install v0.14.0 using the release YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.14.0/release.yaml --server-side

curl --silent --location https://raw.githubusercontent.com/shipwright-io/build/v0.14.0/hack/setup-webhook-cert.sh | bash

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.14.0/sample-strategies.yaml --server-side

If you are a long-standing Shipwright user who started with our Alpha API (before v0.13.0), we recommend that you run a storage version migration. It updates the stored version of all Shipwright resources in your cluster to the Beta API to avoid unnecessary invocations of our conversion webhook in the future.

curl --silent --location https://raw.githubusercontent.com/shipwright-io/build/v0.14.0/hack/storage-version-migration.sh | bash

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.14.0/cli_0.14.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.14.0/cli_0.14.0_macOS_$(uname -m).tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.14.0/cli_0.14.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first ensure the operator v0.14.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

In the modern software development era, containers have become an essential tool for developers. They offer a consistent environment for applications to run, making it easier to develop, test, and deploy software across different platforms. However, like any other technology, containers are not immune to security vulnerabilities. This is where vulnerability scanning for container images becomes crucial. In this blog, we will discuss how to run vulnerability scanning on container images with Shipwright while building those images.

Before jumping into this feature, let’s explain what Shipwright is and why vulnerability scanning is important.

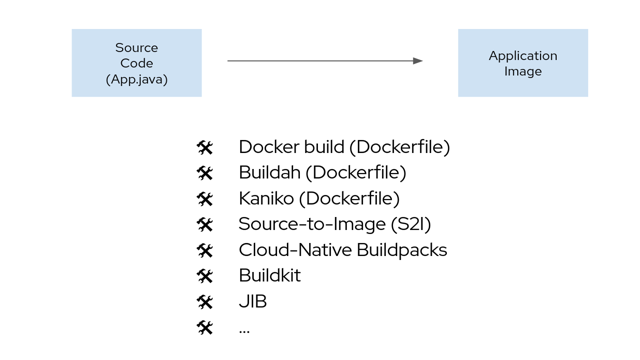

Shipwright is an open-source framework designed to facilitate the building of container images directly within Kubernetes environments. It aims to streamline the development and deployment process by providing a native Kubernetes solution for creating container images from source code. Shipwright supports multiple build strategies and tools, such as Kaniko, Paketo Buildpacks, Ko, Buildkit and Buildah, providing flexibility and extensibility to meet various application needs. This Kubernetes-native solution helps ensure that container images are built efficiently and securely, leveraging the strengths of the Kubernetes ecosystem.

Shipwright consists of four core components:

You can learn more by visiting this link.

Vulnerability scanning for container images involves examining the image for known security vulnerabilities. This is typically done using automated tools that compare the contents of the image against a database of known vulnerabilities. The key reasons for Vulnerability Scanning are:

There are many popular tools available for vulnerability scanning of container images, such as Clair, Trivy, Aqua Security, and Snyk.

In Shipwright, we use Trivy under the covers for vulnerability scanning. Our rationale for choosing this tool can be found in SHIP-0033.

Before we dive into how it works, let’s explore the features Shipwright offers for vulnerability scanning of container builds:

spec:
  output:
    vulnerabilityScan:
      enabled: true
      failOnFinding: true # the image won't be pushed to the registry if set to true
      ignore:
        issues:
          - CVE-2022-12345 # list of CVEs to be ignored
        severity: Low | Medium | High | Critical
        unfixed: true # ignores the unfixed vulnerabilities

Configuration Options

- vulnerabilityScan.enabled: Specifies whether to run a vulnerability scan for the image. The supported values are true and false.
- vulnerabilityScan.failOnFinding: Indicates whether to fail the build run if the vulnerability scan finds vulnerabilities. The supported values are true and false. This field is optional and false by default.
- vulnerabilityScan.ignore.issues: References the security issues to be ignored in the vulnerability scan.
- vulnerabilityScan.ignore.severity: Denotes the severity levels of security issues to be ignored, valid values are:
- vulnerabilityScan.ignore.unfixed: Indicates whether to ignore vulnerabilities for which no fix exists. The supported values are true and false.

Now, let’s see vulnerability scanning for a container image with Shipwright in action. If you want to try this out in a kind cluster, follow the steps from this section until you create the push secret. As a next step, create a Build object with vulnerability scanning enabled, replacing <REGISTRY_ORG> with the registry username your push-secret has access to:

REGISTRY_ORG=<your_registry_org>
cat <<EOF | kubectl apply -f -
apiVersion: shipwright.io/v1beta1
kind: Build
metadata:
  name: buildah-golang-build
spec:
  source:
    type: Git
    git:
      url: https://github.com/shipwright-io/sample-go
    contextDir: docker-build
  strategy:
    name: buildah-shipwright-managed-push
    kind: ClusterBuildStrategy
  paramValues:
  - name: dockerfile
    value: Dockerfile
  output:
    image: docker.io/${REGISTRY_ORG}/sample-golang:latest
    pushSecret: push-secret
    vulnerabilityScan:
      enabled: true
      failOnFinding: true # if set to true, then the image won't be pushed to the registry
EOF

To view the Build which you just created:

$ kubectl get builds

NAME REGISTERED REASON BUILDSTRATEGYKIND BUILDSTRATEGYNAME CREATIONTIME

buildah-golang-build True Succeeded ClusterBuildStrategy buildah-shipwright-managed-push 72s

Now submit your BuildRun:

cat <<EOF | kubectl create -f -
apiVersion: shipwright.io/v1beta1
kind: BuildRun
metadata:
  generateName: buildah-golang-buildrun-
spec:
  build:
    name: buildah-golang-build
EOF

Wait until your BuildRun is completed and then you can view it as follows:

$ kubectl get buildruns

NAME SUCCEEDED REASON STARTTIME COMPLETIONTIME

buildah-golang-buildrun-s9gsh False VulnerabilitiesFound 2m54s 98s

Here, you can see that the buildrun failed with reason VulnerabilitiesFound and it will not push the image to the registry as the failOnFinding option is set to true.

And one can find the list of vulnerabilities in the build run under the .status.output path:

apiVersion: shipwright.io/v1beta1
kind: BuildRun
metadata:
  creationTimestamp: "2024-07-08T08:03:18Z"
  generateName: buildah-golang-buildrun-
  generation: 1
  labels:
    build.shipwright.io/generation: "1"
    build.shipwright.io/name: buildah-golang-build
  name: buildah-golang-buildrun-s9gsh
  namespace: default
  resourceVersion: "19926"
  uid: f3c558e9-e027-4f59-9fdc-438a31c6de11
spec:
  build:
    name: buildah-golang-build
status:
  buildSpec:
    output:
      image: docker.io/karanjmu92/sample-golang:latest
      pushSecret: push-secret
      vulnerabilityScan:
        enabled: true
        failOnFinding: true
    paramValues:
    - name: dockerfile
      value: Dockerfile
    source:
      contextDir: docker-build
      git:
        url: https://github.com/shipwright-io/sample-go
      type: Git
    strategy:
      kind: ClusterBuildStrategy
      name: buildah-shipwright-managed-push
  completionTime: "2024-07-08T08:04:34Z"
  conditions:
  - lastTransitionTime: "2024-07-08T08:04:34Z"
    message: Vulnerabilities have been found in the image which can be seen in the
      buildrun status. For detailed information,see kubectl --namespace default logs
      buildah-golang-buildrun-s9gsh-w488m-pod --container=step-image-processing
    reason: VulnerabilitiesFound
    status: "False"
    type: Succeeded
  failureDetails:
    location:
      container: step-image-processing
      pod: buildah-golang-buildrun-s9gsh-w488m-pod
  output:
    vulnerabilities:
    - id: CVE-2023-24538
      severity: critical
    - id: CVE-2023-24540
      severity: critical
    .
    .
    .
    - id: CVE-2024-24791
      severity: medium
  source:
    git:
      branchName: main
      commitAuthor: OpenShift Merge Robot
      commitSha: 96afb4108fba22e91f42168d8babb5562ac8e5bb
    timestamp: "2023-08-10T15:24:45Z"
  startTime: "2024-07-08T08:03:18Z"
  taskRunName: buildah-golang-buildrun-s9gsh-w488m
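Rather than reading the full BuildRun object, the findings under `.status.output.vulnerabilities` can be pulled out with a JSONPath query. The helper below is a minimal sketch: the function name `list_vulnerabilities` is our own invention, and it assumes `kubectl` is configured for the cluster where the BuildRun lives.

```shell
# Print "<CVE id><TAB><severity>" for every finding recorded on a BuildRun.
# Usage: list_vulnerabilities <buildrun-name> [namespace]
# The function name is hypothetical; only standard kubectl flags are used.
list_vulnerabilities() {
  local buildrun="$1"
  local namespace="${2:-default}"
  kubectl get buildrun "$buildrun" \
    --namespace "$namespace" \
    --output jsonpath='{range .status.output.vulnerabilities[*]}{.id}{"\t"}{.severity}{"\n"}{end}'
}

# Example (requires cluster access):
#   list_vulnerabilities buildah-golang-buildrun-s9gsh | grep -c critical
```

Emitting one finding per line keeps the output easy to filter with tools such as `grep`, `sort`, or `cut`.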

Shipwright offers a robust and flexible solution for building container images within Kubernetes environments. By integrating vulnerability scanning directly into the build process, Shipwright helps keep vulnerable images out of your registry and moves your builds closer to industry standards and supply chain security best practices.

Update 2024-07-09: added Operator installation instructions

After months of diligent work, just in time for cdCon 2024, we are releasing v0.13.0. This significant milestone incorporates a number of enhancements, features and bug fixes. Here are the key highlights:

After upgrading from v0.12.0 to v0.13.0, you can run the following two commands to remove unnecessary permissions on the shipwright-build-webhook:

kubectl delete clusterrolebinding shipwright-build-webhook && kubectl delete clusterrole shipwright-build-webhook

We switched our CRDs’ storage version to v1beta1. We strongly advise users to migrate to our v1beta1 API, as we intend to deprecate v1alpha1 in a future release. Note that v1alpha1 is still served for all CRDs.

SHIP 0037 is now implemented. Users can make use of the Build .spec.output.timestamp to explicitly set the resulting container image timestamp.
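To make the new field concrete, here is a small sketch of a Build that sets `.spec.output.timestamp`. The value `SourceTimestamp` is an assumption based on our reading of SHIP 0037 (other values, such as `Zero`, `BuildTimestamp`, or a Unix epoch given as a string, are believed to be accepted as well); the Build name and image are hypothetical placeholders.

```shell
# Sketch of a Build that pins the image creation timestamp.
# "SourceTimestamp" is an assumed value; verify it against the Build docs.
cat > build-timestamp.yaml <<'EOF'
apiVersion: shipwright.io/v1beta1
kind: Build
metadata:
  name: sample-timestamp-build
spec:
  source:
    type: Git
    git:
      url: https://github.com/shipwright-io/sample-go
    contextDir: docker-build
  strategy:
    name: buildkit
    kind: ClusterBuildStrategy
  output:
    image: registry.example.com/my-org/sample-go:latest
    # Reuse the timestamp of the source (for Git, the commit) for the image.
    timestamp: SourceTimestamp
EOF

# Apply with: kubectl apply --filename build-timestamp.yaml
```

A fixed, source-derived timestamp is one ingredient of reproducible images, since rebuilding the same commit no longer changes the image metadata.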

Our Shipwright controllers now use the Tekton v1 API when working with TaskRuns. See PR.

The storage version for all CRDs has been switched to v1beta1. Concurrently, our Shipwright controllers have been updated to use this API version. The v1alpha1 version continues to be supported.

We’ve implemented minor adjustments to the existing v1beta1 API for the sake of consistency, incorporating feedback gathered from the v0.12.0 release. These are:

- build.Spec.Source.GitSource to build.Spec.Source.Git, aligning them with their respective JSON tags.
- build.Spec.Source.Type and buildRun.Spec.Source.Type as mandatory, addressing usability concerns raised by users.
- build.Spec.Source optional. Enabling the definition of a Build without any source. This feature proves valuable particularly when executing a Build only with local source.
- build.spec.source.git.url upon the specification of build.spec.source.git.

Further details can be found in PR 1504 and PR 1441.

As the usage of the webhook increased over the last months, we’ve made enhancements to address certain gaps:

When implementing prevention measures against path traversal during the extraction of an OCI artifact, we were too strict. We only needed to prevent /../ because this path segment means going one directory up. We still must allow .. inside a name because a directory or file name can contain two consecutive dots. You can now use files and directories with two consecutive dots in their names when using an OCI artifact as source.
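The distinction between a `..` path segment and two dots inside a name can be illustrated with a small shell check. This is only a sketch of the idea, not the Go code used by Shipwright; the function name `is_safe_entry` and the sample paths are made up for illustration.

```shell
# Return 0 when no path segment is exactly "..", 1 otherwise.
# Wrapping the path in slashes lets one pattern cover leading, middle,
# and trailing ".." segments.
is_safe_entry() {
  case "/$1/" in
    */../*) return 1 ;;  # a ".." segment would climb out of the target directory
    *) return 0 ;;       # dots inside a name, such as "file..txt", are harmless
  esac
}

# Classify a few sample archive entries.
for entry in "docs/file..txt" "my..dir/main.go" "../etc/passwd" "src/../../secret"; do
  if is_safe_entry "$entry"; then
    echo "allow  $entry"
  else
    echo "reject $entry"
  fi
done
```

Checking whole segments rather than searching for the substring `..` is what avoids the overly strict behavior described above.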

When a Build with an unknown strategy kind is defined, the Build validation triggers. However, it was failing to update the Build status to Failed, resulting in an endless loop during reconciliation. This issue has been resolved.

Dependabot updates play a crucial role in keeping our Go dependencies patched against CVEs. Building upon this, we’ve recently implemented a similar automation to streamline the process of updating our CI GitHub Actions, see PR 1516.

Furthermore, we have been consistently updating all of our Build tools, such as ko and buildpacks, using our custom automation, ensuring that our Strategies remain on the latest versions.

It’s worth noting that our minimum supported Kubernetes version is now 1.27, while the minimum Tekton version is 0.50.*.

Additionally, Shipwright Build is now compiled with Go 1.21

Our general Roadmap and Adopters doc has been updated.

Install Tekton v0.50.5:

kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.50.5/release.yaml

Install v0.13.0 using the release YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.13.0/release.yaml --server-side

curl --silent --location https://raw.githubusercontent.com/shipwright-io/build/v0.13.0/hack/setup-webhook-cert.sh | bash

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.13.0/sample-strategies.yaml --server-side

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.13.0/cli_0.13.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.13.0/cli_0.13.0_macOS_$(uname -m).tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.13.0/cli_0.13.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first ensure the operator v0.13.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

About a year ago, we published a blog post in which we outlined our vision and our values. Part of this vision was to advance our API to enhance its simplicity and consistency, and to signal a higher level of maturity.

Today, as part of our release v0.12.0, we are introducing our beta API. The beta API brings multiple changes as a result of accumulated experience operating the alpha API and incorporating valuable user feedback.

With the introduction of the beta API, users can have confidence that our core components have been battle-tested, and using our different features is considered a safe practice.

We want to thank our community for their contributions and support in redefining this new API!

The beta API is available starting from the v0.12.0 release. The release is available across our cli, operator and build repository.

Within the v0.12.0 release, a conversion webhook has been introduced to ensure backward compatibility between the v1alpha1 and v1beta1.

We strongly encourage both current and future users to adopt the beta API to benefit from its enhanced definition.

| Resource | Field | Alternative |
|---|---|---|
| Build | .spec.sources | .spec.source |
| Build | .spec.dockerfile | .spec.paramValues[] with dockerfile |
| Build | .spec.builder | none |
| Build | .spec.volumes[].description | none |
| BuildRun | .spec.serviceAccount.generate | .spec.serviceAccount with .generate |
| BuildRun | .spec.sources | .spec.source |
| BuildRun | .spec.volumes[].description | none |

| Old field | New field |
|---|---|
| .spec.source.url | .spec.source.git.url |
| .spec.source.bundleContainer | .spec.source.ociArtifact |
| .spec.sources for LocalCopy | .spec.source.local |
| .spec.source.credentials | .spec.source.git.cloneSecret or .spec.source.ociArtifact.pullSecret |
| .spec.output.credentials | .spec.output.pushSecret |

See this example of the Git source type:

# v1alpha1
---
apiVersion: shipwright.io/v1alpha1
kind: Build
metadata:
  name: a-build
spec:
  source:
    url: https://github.com/shipwright-io/sample-go
    contextDir: docker-build
  strategy:
    name: buildkit
    kind: ClusterBuildStrategy
  output:
    image: an-image

# v1beta1
---
apiVersion: shipwright.io/v1beta1
kind: Build
metadata:
  name: a-build
spec:
  source:
    type: Git
    git:
      url: https://github.com/shipwright-io/sample-go
    contextDir: docker-build
  strategy:
    name: buildkit
    kind: ClusterBuildStrategy
  output:
    image: an-image

| Old field | New field |
|---|---|
| .spec.buildSpec | .spec.build.spec |
| .spec.buildRef | .spec.build.name |
| .spec.sources | .spec.source only for Local |
| .spec.serviceAccount.generate | .spec.serviceAccount with .generate |

Note: generated service accounts are a deprecated feature and may be removed in a future release.

See example:

# v1alpha1
---
apiVersion: shipwright.io/v1alpha1
kind: BuildRun
metadata:
  name: a-buildrun
spec:
  buildRef:
    name: a-build
  serviceAccount:
    generate: true

# v1beta1
---
apiVersion: shipwright.io/v1beta1
kind: BuildRun
metadata:
  name: a-buildrun
spec:
  build:
    name: a-build
  serviceAccount: ".generate"

| Old field | New field |
|---|---|
| .spec.buildSteps | .spec.steps |

For more information, see SHIP 0035.
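To round out the Build and BuildRun examples, the strategy rename from `.spec.buildSteps` to `.spec.steps` might look as follows in a v1beta1 manifest. This is a deliberately tiny sketch: the strategy name, step image, and command are hypothetical and do not build anything.

```shell
# Minimal v1beta1 ClusterBuildStrategy illustrating the renamed step list.
# Strategy name, image, and command are placeholders for illustration only.
cat > strategy-v1beta1.yaml <<'EOF'
apiVersion: shipwright.io/v1beta1
kind: ClusterBuildStrategy
metadata:
  name: sample-strategy
spec:
  # v1alpha1 called this list "buildSteps"; v1beta1 renames it to "steps".
  steps:
    - name: build
      image: registry.example.com/tools/builder:latest
      command:
        - /bin/sh
      args:
        - -c
        - 'echo "build and push happen here"'
EOF

# Apply with: kubectl apply --filename strategy-v1beta1.yaml
```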

Shipwright is back with the v0.12.0 release, moving our API from alpha to beta.

Some key points to consider:

Please take a look at the following blog post to see some of our guidelines on moving your Shipwright Custom Resources from alpha to beta.

Install Tekton v0.47.4:

kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.47.4/release.yaml

Install v0.12.0 using the release YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.12.0/release.yaml --server-side

curl --silent --location https://raw.githubusercontent.com/shipwright-io/build/v0.12.0/hack/setup-webhook-cert.sh | bash

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply --filename https://github.com/shipwright-io/build/releases/download/v0.12.0/sample-strategies.yaml --server-side

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.12.0/cli_0.12.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.12.0/cli_0.12.0_macOS_$(uname -m).tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.12.0/cli_0.12.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first ensure the operator v0.12.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

Shipwright is proud to participate in this year’s Hacktoberfest coding festival. This is our second year accepting Hacktoberfest contributions. We would love to see you join our community!

#shipwright channel on Kubernetes Slack.

We have created a GitHub project listing all issues that are good candidates for your Hacktoberfest pull request.

You are not limited to these items - you can choose any issue to work on, or submit a change that you feel is meaningful.

If your issue does not have the hacktoberfest label, please add the label to your pull request so it can be recognized.

Recently, the Shipwright community came together to define a beta API for the Build project with stronger support guarantees. We have come a long way since our launch two years ago, as “a framework for building container images on Kubernetes.” During the workshop, the community found itself coming back to a fundamental question, “What is Shipwright?” And more importantly, “What do we want Shipwright to be?”

We concluded that Shipwright is and should remain a framework for building container images. Shipwright will continue to make it simple to build a container image from source, using tools that are actively maintained by a community of experts. Our separation of build strategy from build definition and execution will remain a cornerstone of the Shipwright framework.

However, we realized that building the image is just the starting point to delivering software on the cloud. Software supply chain security is a topmost concern of teams large and small. Artifacts like image scans, signatures, software bill of materials, and provenance are needed to build modern software for the cloud. Shipwright can, and should, rise up to meet these demands.

We also decided that Shipwright will continue to run on cloud-native infrastructure, powered by Kubernetes and Tekton. We can go further, though, and plug Shipwright into the vast cloud-native ecosystem, through integrations with CDEvents, ArgoCD, and more. Shipwright is just getting started in this effort through the Triggers and Image sub-projects.

Over the past two years, the Shipwright community has coalesced around three core values: simplicity, flexibility, and security.

Simplicity means that we provide an experience that is intuitive and consistent. It also means that we shouldn’t be afraid to take an opinionated stance on common tasks, or features that we want to add to the project. We discovered in the Beta API workshop areas where our APIs were not consistent or intuitive, and we identified changes to fix these problem areas. These include single sources for builds and maintaining our opinionated steps to obtain source code.

Flexibility means that we provide space for teams to bend Shipwright to fit their needs. This started with the build strategy model itself, which we are keeping at the core of the API. We continued with the Parameters API, which provides avenues for customization between build strategies and build executions. We also took steps in our beta workshop to ensure our API is tool agnostic, such that we can help grow the ecosystem of build tools. This meant that some fields that were only used by specific build tools were dropped.

Lastly, Shipwright aims to meet the security needs for cloud-native applications. Security for Shipwright starts with the transparent pod security contexts built into the build strategy API. This encourages the continued evolution of tooling away from privileged and “root” containers, both of which are potential security risks. As a community, we have started experimenting with tools like Trivy to make the security of Shipwright-built images more transparent. We hope to continue these efforts with emerging software security tools in the future.

Starting in version 0.12, Shipwright will introduce the beta Build API and begin phasing out the current alpha API. We encourage current and future users to provide feedback as we roll this new API out. You can provide feedback by filing an issue on GitHub, sending an email to our mailing list, or posting a message to the #shipwright channel on Kubernetes Slack.

We look forward to hearing from you!

Shipwright is proud to participate in this year’s Hacktoberfest coding festival. This is our first year accepting Hacktoberfest contributions. We would love to see you join our community!

#shipwright channel on Kubernetes Slack.

We have created a GitHub project listing all issues that are good candidates for your Hacktoberfest pull request.

You are not limited to these items - you can choose any issue to work on, or submit a change that you feel is meaningful.

If your issue does not have the hacktoberfest label, please add the label to your pull request so it can be recognized.

Shipwright is back with the v0.11.0 release. It is mostly a maintenance release without new features:

Behind the scenes we are working on streamlining our API objects. As a first step, we deprecate the following fields:

- spec.sources is deprecated. We will consolidate on supporting a single source for a Build under spec.source. HTTP sources are also deprecated because artifacts loaded from an HTTP endpoint do not fit the focus on a secure software supply chain.
- spec.dockerfile and spec.builder are deprecated. Those fields were introduced at the very beginning of this project to support the build strategies of the time, but they only relate to specific build strategies. For a couple of releases we have supported strategy parameters for that purpose, and we will move to those.
- spec.serviceAccount.generate is deprecated. We think that the ad hoc generation of service accounts does not fit very well with Kubernetes’ security principles and will therefore move away from that concept.

Install Tekton v0.38.3:

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.38.3/release.yaml

Install v0.11.0 using the release YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.11.0/release.yaml

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.11.0/sample-strategies.yaml

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.11.0/cli_0.11.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.11.0/cli_0.11.0_macOS_$(uname -m).tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.11.0/cli_0.11.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first make sure the operator v0.11.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

Just before cdCon 2022, we are releasing v0.10. But before we look into it, we would like to invite you to join our cdCon sessions. We’ll have two interesting presentations and a summit where you can share any feedback and suggestions and bring all the questions that you want answered. See our blog post for more information. See you in Austin or in the virtual space.

Let’s get back to v0.10. It comes with one big and long-wanted feature:

We extended our build strategy resource to contain volumes. Build strategy authors can “finalize” them - or make them overridable by Build users.

The most interesting scenario that this enables is the caching of build artifacts. Here is how the ko build strategy makes use of it:

By default, the strategy's cache volume is an emptyDir. Those are ephemeral volumes that exist only for the runtime of a pod. The default behavior therefore is that no caching happens, just as the strategy has always behaved. But the build strategy defines the volume as overridable, so Build users can replace it with a volume that outlives the pod.

Especially if you rebuild larger projects, the performance gain is enormous.

We will look at other sample build strategies in the future and will evolve them with volume support in the next releases.

Note: the feature comes with one breaking change that is relevant for Build Strategy authors. Previously, you were able to define volumeMounts on buildSteps. Shipwright then implicitly added the volumes with an emptyDir. Given we now support volumes in build strategies, we force build strategy authors to define the volume. We did that in other sample build strategies where such implicit volumes were used to share directories between buildSteps. For example, the source-to-image build strategy now has explicit emptyDir volumes to share directories between the source analysis and build step.
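To make the "finalized" versus "overridable" distinction concrete, here is a minimal sketch of how a Build's volumes could be merged into a strategy's volumes. It is an illustration under stated assumptions, not the controller's actual logic: the function `resolve_volumes`, the `overridable` key, the volume names and the claim name are all hypothetical, and rejecting unknown volume names is an assumption as well.

```python
def resolve_volumes(strategy_volumes: list, build_volumes: list) -> list:
    """Merge Build volume overrides into the volumes defined by a strategy."""
    resolved = {volume["name"]: dict(volume) for volume in strategy_volumes}
    for override in build_volumes:
        name = override["name"]
        if name not in resolved:
            # Assumption: a Build may only override volumes the strategy defines.
            raise ValueError(f"volume {name!r} is not defined by the strategy")
        if not resolved[name].get("overridable", False):
            # The strategy author "finalized" this volume.
            raise ValueError(f"volume {name!r} is not overridable")
        # Replace the volume source, keep the overridable marker.
        resolved[name] = {**override, "overridable": True}
    return list(resolved.values())


if __name__ == "__main__":
    strategy = [
        {"name": "gocache", "overridable": True, "emptyDir": {}},
        {"name": "tmp", "emptyDir": {}},
    ]
    build = [{"name": "gocache", "persistentVolumeClaim": {"claimName": "ko-cache"}}]
    print(resolve_volumes(strategy, build))
```

With the override in place the cache volume is backed by a persistent claim, which is what makes caching between build runs possible. Without it the emptyDir default applies.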

Besides that, we invested in maintenance-related items:

Install Tekton v0.35.1:

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.35.1/release.yaml

Install v0.10.0 using the release YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.10.0/release.yaml

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.10.0/sample-strategies.yaml

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.10.0/cli_0.10.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.10.0/cli_0.10.0_macOS_$(uname -m).tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.10.0/cli_0.10.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first make sure the operator v0.10.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

We are pleased to announce that this year we will be hosting our first Shipwright Community Summit at the cdCon 2022 Conference.

The Shipwright Community Summit brings together our community members and future contributors, or any interested party. During the Community Summit we expect to get closer to you and address any questions you might have related to our Technology, Processes or Community.

If you are interested in participating in this event, please refer to the next sections.

It goes without saying that we are very excited to meet you.

The Shipwright Community will be available both on-site and virtually on Thursday, June 9, 2022, from 2:00pm to 5:00pm CDT. You can find the schedule here.

On-site: Find us at room 209 in the Conference Venue.

Virtually: We will be hosting a virtual session in Zoom. Join us on https://zoom.us/j/97487770299.

| Topic | Description |

|---|---|

| Ask us Anything | We prepared in advance a GitHub discussion, so you could drop there any questions you might want to discuss. |

| Contributors Introduction | We would like to tell you a little more on who we are, how to reach out and how to engage with the community. |

| Demo Festival | Let’s show some of the Shipwright capabilities, and how you can leverage them! |

| Roadmap | Discussion on the upcoming big items, both from a technical and community point of view. |

| Open Forum | The rest of the time would be an office hours approach, where we can discuss anything. |

Here are our two talks taking place during the conference.

DevSecOps with Shipwright and Tekton

Shipwright - What happened in our First Years as a CDF Project

We are proud to have an Easter present for you: the new Shipwright v0.9.0 release with some really cool features.

Interested in what we have for you? Here are three larger items:

In Shipwright, in order to create a container image from source or a Dockerfile, you so far needed a Build and a BuildRun. Very simplified, it is the Build that contains all pieces of information required to know what to build and the BuildRun in this picture is the trigger to kick off the actual build process. This setup allows for a good separation of concerns and is ideal for use cases in which one builds a new image from the same source repository over the course of time.

However, frequent feedback we got is that there are also use cases where users just want to have a one-off build run and in these scenarios an additional Build just adds unnecessary complexity.

With this release, we introduced the option to embed a build specification into a BuildRun. That enables standalone build runs with just one resource definition in the cluster. The build specification is exactly the same configuration one would use in a Build resource, so it will immediately look familiar. Instead of buildRef in the BuildRun spec section, use buildSpec to configure everything you need.

apiVersion: shipwright.io/v1alpha1
kind: BuildRun
metadata:
  name: standalone-buildrun
spec:
  buildSpec:
    source:
      url: https://github.com/shipwright-io/sample-go.git
      contextDir: source-build
    strategy:
      kind: ClusterBuildStrategy
      name: buildpacks-v3
    output:
      image: foo/bar:latest
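A BuildRun therefore points at its build in one of two ways: by reference (buildRef) or embedded (buildSpec). The following sketch classifies a BuildRun spec, given as a plain dictionary, and rejects the ambiguous case where both are set. The function name `build_source` and the error texts are hypothetical. Treating "neither field set" as an error is an assumption of this sketch.

```python
def build_source(buildrun_spec: dict) -> str:
    """Return "referenced" or "embedded" for a BuildRun spec dictionary."""
    has_ref = "buildRef" in buildrun_spec
    has_spec = "buildSpec" in buildrun_spec
    if has_ref and has_spec:
        # Both at once would be ambiguous.
        raise ValueError("buildRef and buildSpec cannot be used together")
    if not (has_ref or has_spec):
        # Assumption of this sketch: one of the two must be present.
        raise ValueError("either buildRef or buildSpec is required")
    return "referenced" if has_ref else "embedded"


if __name__ == "__main__":
    standalone = {"buildSpec": {"output": {"image": "foo/bar:latest"}}}
    print(build_source(standalone))
```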

Some technical notes:

- You cannot use buildRef and buildSpec at the same time in one BuildRun, as this would be ambiguous. The respective BuildRun will reflect this with an error message in the status condition.

- You cannot use the BuildRun overrides (for example timeout), which can only be used with the buildRef field. These overrides are not required, since you can define all build-specific fields in the buildSpec directly.

- You can use buildRef or buildSpec in different build runs. It is up to your respective use case and liking.

- An embedded build specification is not a Build: it does not have a name and it does not affect the purely build-related metrics (i.e. the build counter).

- TaskRun resources that are created by Shipwright will only have a BuildRun reference in their label for embedded builds, since there is no actual Build in the system. This is important for any label selector that might expect a TaskRun to have the build label.

- The buildSpec field and the Build spec field are technically the exact same definition. This means everything that is supported in builds is also supported as an embedded build specification. The same applies to potential field deprecations: they apply to both.

Until now, Shipwright did not have a way to delete BuildRuns automatically. This release allows you to do that by adding a few fields to Build and BuildRun specifications. This feature can be used by adding the following fields:

- buildrun.spec.retention.ttlAfterFailed: The BuildRun is deleted if the mentioned duration of time has passed after the BuildRun has failed.

- buildrun.spec.retention.ttlAfterSucceeded: The BuildRun is deleted if the mentioned duration of time has passed after the BuildRun has succeeded.

- build.spec.retention.ttlAfterFailed: The BuildRun is deleted if the mentioned duration of time has passed after the BuildRun has failed.

- build.spec.retention.ttlAfterSucceeded: The BuildRun is deleted if the mentioned duration of time has passed after the BuildRun has succeeded.

- build.spec.retention.succeededLimit: Defines the number of succeeded BuildRuns for a Build that can exist.

- build.spec.retention.failedLimit: Defines the number of failed BuildRuns for a Build that can exist.

Some technical notes:

- If you change the build.spec.retention.failedLimit and build.spec.retention.succeededLimit values, they become effective immediately.

- If you change the build.spec.retention.ttlAfterFailed and build.spec.retention.ttlAfterSucceeded values in Builds, they will only affect new BuildRuns. However, updating buildrun.spec.retention.ttlAfterFailed and buildrun.spec.retention.ttlAfterSucceeded in BuildRuns that have already been created will enforce the changes as soon as they are applied.

Our command line interface supports these new fields when creating Builds and BuildRuns:

$ shp build create my-build [...] --retention-failed-limit 10 --retention-succeeded-limit 5 --retention-ttl-after-failed 48h --retention-ttl-after-succeeded 3h

$ shp build run my-build --retention-ttl-after-failed 24h --retention-ttl-after-succeeded 1h
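The two kinds of retention rules can be sketched in a few lines: a TTL compares the completion time of one BuildRun against a duration, while a limit looks at all BuildRuns of a Build with the same outcome. This is a simplified illustration, not the controller's code. The helper names are hypothetical, the duration parser only understands single-unit values such as the 48h and 3h used above, and pruning the oldest BuildRuns first is an assumption of this sketch.

```python
from datetime import datetime, timedelta, timezone

UNITS = {"h": "hours", "m": "minutes", "s": "seconds"}


def parse_ttl(ttl: str) -> timedelta:
    """Parse a single-unit duration such as "48h" or "30m"."""
    return timedelta(**{UNITS[ttl[-1]]: float(ttl[:-1])})


def ttl_expired(completed_at: datetime, ttl: str, now: datetime) -> bool:
    """True once `ttl` has passed since the BuildRun completed."""
    return now - completed_at >= parse_ttl(ttl)


def over_limit(completion_times: dict, limit: int) -> list:
    """Names of BuildRuns beyond `limit`, keeping the newest ones."""
    newest_first = sorted(completion_times, key=completion_times.get, reverse=True)
    return newest_first[limit:]


if __name__ == "__main__":
    now = datetime(2022, 4, 1, 12, 0, tzinfo=timezone.utc)
    finished = now - timedelta(hours=4)
    print(ttl_expired(finished, "3h", now), ttl_expired(finished, "48h", now))
```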

In our v0.8.0 release, we enabled local source for builds using the streaming approach. A BuildRun is then waiting to get sources which the CLI streams using kubectl exec capabilities. This is an amazing feature that enables you to build container images without being required to commit and push your sources into a Git repository.

With v0.9.0, we support an alternative approach to transport sources into the BuildRun: using a container image, which we call the source bundle. You set up your build with some additional flags:

$ shp build create my-build [...] --source-bundle-image my-registry/some-image --source-bundle-prune Never|AfterPull --source-credentials-secret registry-credentials

And then you run it in the same way as with the streaming approach:

$ cd directory-with-my-sources

$ shp build upload my-build

The CLI will package the sources and upload them to the container registry. The BuildRun will download them from there. The --source-bundle-prune argument enables you to specify whether sources should be kept or deleted after the BuildRun has pulled them.

Why do we need two approaches? And which one should you use? Here are some criteria to decide:

You want to use local sources without much configuration? Use the streaming approach.

Your Kubernetes cluster does not permit you to perform a kubectl exec operation? Use the bundle approach.

You want to keep the sources of your build to access them later? Use the bundle approach. The BuildRun status captures the digest of the source bundle image that was pulled:

kind: BuildRun
status:
  sources:
  - name: default
    bundle:
      digest: sha256:ecba65abd0f49ed60b1ed40b7fca8c25e34949429ab3c6c963655e16ba324170

You can read more about this in our CLI documentation.
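If you want to record which source bundle a BuildRun used, the digest can be read from the status shown above. The sketch below works on a BuildRun that has already been loaded into a dictionary (for example from JSON output). The function name `bundle_digests` is hypothetical, and skipping sources without a bundle entry is an assumption.

```python
def bundle_digests(buildrun: dict) -> dict:
    """Map each source name to the digest of the bundle image that was pulled."""
    sources = buildrun.get("status", {}).get("sources", [])
    # Sources without a `bundle` entry are skipped.
    return {s["name"]: s["bundle"]["digest"] for s in sources if "bundle" in s}


if __name__ == "__main__":
    buildrun = {
        "kind": "BuildRun",
        "status": {
            "sources": [
                {
                    "name": "default",
                    "bundle": {
                        "digest": "sha256:ecba65abd0f49ed60b1ed40b7fca8c25e34949429ab3c6c963655e16ba324170"
                    },
                }
            ]
        },
    }
    print(bundle_digests(buildrun))
```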

And that’s not all, we have some smaller items that are worth exploring:

- A platform-api-version parameter that allows you to configure the CNB_PLATFORM_API version, which is relevant for using features of newer Buildpacks implementations.

- A shp version command to easily figure out which version of the command line interface is installed.

Install Tekton v0.34.1:

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.34.1/release.yaml

Install v0.9.0 using the release YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.9.0/release.yaml

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.9.0/sample-strategies.yaml

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.9.0/cli_0.9.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp version

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.9.0/cli_0.9.0_macOS_x86_64.tar.gz | tar -xzf - -C /usr/local/bin shp

shp version

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.9.0/cli_0.9.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp version

shp help

To deploy and manage Shipwright Builds in your cluster, first make sure the operator v0.9.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

Some really cool features are under development these days:

You want to hear from us? Join us at cdCon in June in Austin, where we will speak about our project.

So, you have heard great things about Shipwright last year and you are ready for more? We are starting the year with our v0.8.0, and here is a list of the most relevant things you should know.

As promised in the v0.7.0 blog post, we closed last year developing three interesting features.

We introduced an extension to the parameter feature, by allowing users to define parameters in the form of a list. A list can be composed of values from secrets, configmaps or plain values.

Our main driver was the support for ARG instructions in Dockerfiles. This allows users to further customize their builds by specifying variables that are available to the RUN command.

In addition, being able to use primitive resources (such as secrets and configmaps) to store key-values, allows users to protect confidential data or to share data when defining parameters values in their Builds or BuildRuns.

Note: For more details on this, please see the docs.
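As a rough illustration of a list parameter that mixes plain values with references, the sketch below resolves each entry against in-memory stand-ins for ConfigMaps and Secrets. The entry keys `value`, `configMapValue` and `secretValue`, the function name and all sample names are assumptions made for this example. Check the documentation for the actual schema.

```python
def resolve_param_values(entries: list, config_maps: dict, secrets: dict) -> list:
    """Resolve the entries of a list parameter into plain strings."""
    resolved = []
    for entry in entries:
        if "value" in entry:
            resolved.append(entry["value"])
        elif "configMapValue" in entry:
            ref = entry["configMapValue"]
            resolved.append(config_maps[ref["name"]][ref["key"]])
        elif "secretValue" in entry:
            ref = entry["secretValue"]
            resolved.append(secrets[ref["name"]][ref["key"]])
        else:
            raise ValueError(f"unsupported parameter entry: {entry!r}")
    return resolved


if __name__ == "__main__":
    # Hypothetical build-args: one plain value and one taken from a Secret.
    entries = [
        {"value": "GO_VERSION=1.17"},
        {"secretValue": {"name": "npm-registry", "key": "token-arg"}},
    ]
    secrets = {"npm-registry": {"token-arg": "NPM_TOKEN=example"}}
    print(resolve_param_values(entries, {}, secrets))
```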

Surfacing errors from different containers can be a challenging task, not because of technicality, but rather the question of the best way to represent the state. In case of failure or success during execution, we surface the state under the .status subresource of a BuildRun.

apiVersion: shipwright.io/v1alpha1
kind: BuildRun
# [...]
status:
  # [...]
  failureDetails:
    location:
      container: step-source-default
      pod: baran-build-buildrun-gzmv5-b7wbf-pod-bbpqr
    message: The source repository does not exist, or you have insufficient permission
      to access it.
    reason: GitRemotePrivate

In this release we concentrated on improving the reporting of errors that occur during the cloning of Git repositories by introducing the .status.failureDetails field. This provides further details on why step-source-default failed.

In addition, this feature enables Build Strategy Authors to signal what to surface under .status.failureDetails.reason and .status.failureDetails.message in case a container terminates with a non-zero exit code. We will be gradually adopting this capability in our strategies. At the moment it is only used in the Buildkit strategy.

Now you do not need to worry if you have Git misconfigurations in your Builds. We got you covered!

Note: For more details, please see the docs.
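Tooling that watches BuildRuns can turn these fields into a one-line summary. The following sketch assumes the BuildRun has been loaded into a dictionary with the structure shown in the example status. The function name `failure_summary` and the fallback texts are hypothetical.

```python
from typing import Optional


def failure_summary(buildrun: dict) -> Optional[str]:
    """Return a one-line description of a failed BuildRun, or None."""
    details = buildrun.get("status", {}).get("failureDetails")
    if not details:
        return None
    location = details.get("location", {})
    return (
        f"{details.get('reason', 'Unknown')}: {details.get('message', '')} "
        f"(pod {location.get('pod', '?')}, container {location.get('container', '?')})"
    )


if __name__ == "__main__":
    buildrun = {
        "status": {
            "failureDetails": {
                "location": {
                    "container": "step-source-default",
                    "pod": "baran-build-buildrun-gzmv5-b7wbf-pod-bbpqr",
                },
                "message": "The source repository does not exist, "
                "or you have insufficient permission to access it.",
                "reason": "GitRemotePrivate",
            }
        }
    }
    print(failure_summary(buildrun))
```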

At Shipwright, we’ve spent a lot of time trying to figure out the best ways to simplify the experience when building container images. In this release we are introducing a new feature that dramatically improves it. We call it Local Source Upload.

This feature allows users to build container images from their local source code, improving the developer experience and moving them closer to the inner dev loop (single developer workflow).

$ shp build upload -h

Creates a new BuildRun instance and instructs the Build Controller to wait for the data streamed,

instead of executing "git clone". Therefore, you can employ Shipwright Builds from a local repository

clone.

The upload skips the ".git" directory completely, and it follows the ".gitignore" directives, when

the file is found at the root of the directory uploaded.

$ shp buildrun upload <build-name>

$ shp buildrun upload <build-name> /path/to/repository

Usage:

shp build upload <build-name> [path/to/source|.] [flags]

Go ahead and give it a try! The feature is now available in the v0.8.0 cli, look for shp build upload!
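The help text says that the upload skips the ".git" directory and honors a ".gitignore" found at the root of the uploaded directory. The sketch below shows what such a file selection could look like. It is a deliberately simplified stand-in, not the CLI's implementation: the function name is hypothetical, and only plain glob patterns are matched via `fnmatch`, while real .gitignore semantics (negation, directory patterns, anchoring) are not covered.

```python
import fnmatch
import os


def files_to_upload(root: str) -> list:
    """List files under `root`, skipping .git and simple .gitignore globs."""
    patterns = []
    ignore_file = os.path.join(root, ".gitignore")
    if os.path.isfile(ignore_file):
        with open(ignore_file) as handle:
            lines = (line.strip() for line in handle)
            patterns = [line for line in lines if line and not line.startswith("#")]

    selected = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune .git in place so os.walk never descends into it.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            rel = os.path.relpath(os.path.join(dirpath, name), root).replace(os.sep, "/")
            if any(fnmatch.fnmatch(rel, p) or fnmatch.fnmatch(name, p) for p in patterns):
                continue
            selected.append(rel)
    return sorted(selected)


if __name__ == "__main__":
    print(files_to_upload("."))
```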

Install Tekton v0.30.1

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.30.1/release.yaml

Install v0.8.0 using the release YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.8.0/release.yaml

(Optionally) Install the sample build strategies using the YAML manifest:

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.8.0/sample-strategies.yaml

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.8.0/cli_0.8.0_windows_x86_64.tar.gz | tar xzf - shp.exe

shp help

curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.8.0/cli_0.8.0_macOS_x86_64.tar.gz | tar -xzf - -C /usr/local/bin shp

shp help

curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.8.0/cli_0.8.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

shp help

To deploy and manage Shipwright Builds in your cluster, first make sure the operator v0.8.0 is installed and running on your cluster. You can follow the instructions on OperatorHub.

Next, create the following:

---
apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-operator
spec:
  targetNamespace: shipwright-build

Ready for Christmas? We are, and our v0.7.0 release just made it!

Is it a big thing just like our previous v0.6.0 release? No, but we have still done a couple of nice things:

We decided to move away from Quay as the container registry for the images that we produce, and to consolidate things in GitHub instead. The new images can therefore be found in the Packages section of our repository. Don’t worry, there is nothing you need to do. You can continue to install Shipwright through the Kubernetes manifest, which has the right image location in it.

What else has changed with our images? We now sign them with cosign! You can verify our controller image with the following command:

COSIGN_EXPERIMENTAL=1 cosign verify ghcr.io/shipwright-io/build/shipwright-build-controller:v0.7.0

Verification for ghcr.io/shipwright-io/build/shipwright-build-controller:v0.7.0 --

The following checks were performed on each of these signatures:

- The cosign claims were validated

- Existence of the claims in the transparency log was verified offline

- Any certificates were verified against the Fulcio roots.

[{"critical":{"identity":{"docker-reference":"ghcr.io/shipwright-io/build/shipwright-build-controller"},"image":{"docker-manifest-digest":"sha256:887b76092d0e6f3c4f4c7b781589f41fde1c967ae9ae62f3a6bdbb18251a562f"},"type":"cosign container image signature"}...

Our signing process takes advantage of the new keyless mode for cosign and support for OIDC tokens in GitHub actions.

buildrun delete Command

In v0.7.0 we fixed a bug that prevented the shp buildrun delete command from being exposed to users.

Thanks to new static analysis tooling, we were able to catch this bug and make this command available.

This will be useful for clusters that rebuild their applications regularly with Shipwright, as pruning old BuildRun objects is essential to keeping your cluster healthy.

We have completely overhauled the operator for those who use Operator Lifecycle Manager (OLM) to install Shipwright.

The operator now comes with its own Custom Resource - ShipwrightBuild - that allows administrators to control where the Shipwright build controller is installed.

It also has the ability to automatically install Tekton Pipelines if Tekton’s operator is present in one of OLM’s catalogs.

So far, everything was mostly internal. No new features for our users at all? Of course not. :-) But smaller this time:

- A new flag, GIT_ENABLE_REWRITE_RULE. This is false by default, but you can set it to true in the deployment. It addresses a problem that many of our users faced downstream when using Shipwright in IBM Cloud Code Engine: to access private code repositories, they managed to get their SSH key into the system and referenced it in the Build, but they specified the HTTPS URL instead of the SSH URL. The flag advises our Git step to set up an insteadOf configuration so that the HTTPS URL is internally rewritten to the SSH URL automatically when applicable. The same setting also helps if you have submodules configured using HTTPS in your .gitmodules file, but need to pull them with authentication.

- Currently, an emptyDir volume is implicitly created if the strategy uses volume mounts in the build steps. This implicit behavior is now deprecated, and will be replaced with explicit support for volumes in an upcoming release.

Nothing has changed on the installation. Assuming your Kubernetes cluster is ready, it’s all done with just these three commands, which install Tekton, Shipwright, and the sample build strategies.

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.30.0/release.yaml

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.7.0/release.yaml

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.7.0/sample-strategies.yaml

For the CLI, we still have no package manager support. :-( You therefore need to get the release again from GitHub:

$ curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.7.0/cli_0.7.0_windows_x86_64.tar.gz | tar xzf - shp.exe

$ shp help

$ curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.7.0/cli_0.7.0_macOS_x86_64.tar.gz | tar -xzf - -C /usr/local/bin shp

$ shp help

$ curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.7.0/cli_0.7.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

$ shp help

Coming soon! We are in the process of adding the new version of the Shipwright operator to OperatorHub. Once the new version has been added, you can follow the provided instructions on OperatorHub to install the operator with OLM. If you previously installed v0.1.0 of the Shipwright operator, you must remove it first.

Once the operator has been installed, you will be able to deploy the Build controller and APIs by creating an instance of the ShipwrightBuild custom resource:

apiVersion: operator.shipwright.io/v1alpha1
kind: ShipwrightBuild
metadata:
  name: shipwright-build
spec:
  targetNamespace: shipwright-build

We have cool stuff under development. So, let me list four items that are in the making.

Soon you should be able to see an evolution of parameters: build strategy authors will be able to define array parameters which is required for scenarios such as build-args for Dockerfile-based builds, and Build users will be able to define that the value should be retrieved from a ConfigMap or Secret.

We work on the next evolution of error reporting inside BuildRuns. Where you today just see a BuildRun being failed and must look at the Pod logs to determine the root cause, you will see further error reasons and messages in the BuildRun status directly – with those details provided internally in our Shipwright-managed steps (like the Git step, for example when a revision does not exist), but also by the build strategy author (for example if Buildpacks fail to detect any source code, or a Dockerfile-based strategy when the Dockerfile does not exist).

We are also looking to add support for volumes (such as PersistentVolumeClaims) in future releases.

Our first pass will help build strategy authors add volumes in their build steps, enabling capabilities such as artifact and image layer caching.

Finally, we investigated and spiked on different approaches to support building from source code from your local disk rather than from a Git repository. Part of the code is even in the product already, but we need more time to get it done end-to-end.

Stay tuned!

It was a long journey, longer than it should have been, but we have finally reached our goal! Shipwright Build v0.6.0, our first release after joining the Continuous Delivery Foundation.

The feature list is impressive. It was hard to pick a “Top 3”, but here are my choices:

Our API objects (the build strategy that defines the steps to turn source code into a container image, the build where a user configures their container image build, and the build run as an instance of a build) were missing a major capability: parameterization. We defined the available inputs that a user specified in a build. Those were only the minimum: the location of the source code, the name of the strategy and the destination image. Any other customization of the build was not possible, limiting the possible scenarios.

Two major enhancements bring us a huge step forward:

Build strategy authors can now define parameters that they can then use in their steps. Build users provide the values for those parameters. Our sample build strategies start to make use of this feature: the BuildKit sample now contains a parameter to enable the usage of an insecure container registry, and the ko sample comes with a rich set of parameters to select Go version and flags, ko version and the main package directory. The ko sample build outlines how those parameters can be used to build the Shipwright Build controller image. Read more about parameters in the documentation for build strategy authors and build users.

It is not always possible to define all of the parameters upfront in a build strategy. For those tools that control behavior through environment variables, users can now define environment variables in their builds and build runs. Environment variable values can be referenced from Kubernetes secrets to support scenarios where for example tokens for private dependency management systems are needed. Examples of builds and build runs with environment variables can be found in our build and build run documentation.

Being based on Kubernetes, all our objects are Kubernetes custom resources with their ecosystem-specific syntax and concepts. But, our users should not be required to be experts in using Kubernetes. We are therefore proud to release the first version of our CLI. Build users can use it to easily manage their builds and build runs. Some highlights:

Assuming you are connected to your Kubernetes cluster and have the credentials for your container registry in place, then creating a build for our go-sample is a single command:

$ shp build create sample-go --source-url https://github.com/shipwright-io/sample-go --source-context-dir source-build --output-image <CONTAINER_REGISTRY_LOCATION>/sample-go --output-credentials-secret <CONTAINER_REGISTRY_SECRET>

Created build "sample-go"

And then it is just another single command to trigger a build run and to follow its logs. Nice. 😊

$ shp build run sample-go --follow

Pod 'sample-go-9hbpj-l9t5j-pod-fz2sp' is in state "Pending"...

Pod 'sample-go-9hbpj-l9t5j-pod-fz2sp' is in state "Pending"...

[source-default] 2021/10/08 11:43:33 Info: ssh (/usr/bin/ssh): OpenSSH_8.0p1, OpenSSL 1.1.1g FIPS 21 Apr 2020

[source-default] 2021/10/08 11:43:33 Info: git (/usr/bin/git): git version 2.27.0

[source-default] 2021/10/08 11:43:33 Info: git-lfs (/usr/bin/git-lfs): git-lfs/2.11.0 (GitHub; linux arm64; go 1.14.4)

[source-default] 2021/10/08 11:43:33 /usr/bin/git clone -h

[source-default] 2021/10/08 11:43:33 /usr/bin/git clone --quiet --no-tags --single-branch --depth 1 -- https://github.com/shipwright-io/sample-go /workspace/source

...

So far so good. Please keep in mind that this is our first CLI release. We know that some of the new backend features are not yet available through it, and the command layout will likely go through the subsequent iterations until we are all happy with the usability and consistency.

So far, the images that were produced in the strategy’s tools were untouched. Now we started going beyond this:

A community-provided extension for the Kaniko build strategy sample provides image scanning using the Trivy tool. This is an example of how a build strategy author can provide advanced capabilities. Read about scanning with Trivy and check out how the build strategy has been built.

Another feature enables first-class support for labels and annotations provided by build users. Those are added to the image after the build strategy steps are done. Read more in Defining the output of a build.


A lot of nice stuff, but is that all? No. 😊 Some other small but still nice features:

For a full list of updates you can check out the release change log.

Now, how to get started? Assuming your Kubernetes cluster is ready, it’s all done with just these three commands, which install Tekton, Shipwright, and the sample build strategies.

kubectl apply -f https://storage.googleapis.com/tekton-releases/pipeline/previous/v0.25.0/release.yaml

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.6.0/release.yaml

kubectl apply -f https://github.com/shipwright-io/build/releases/download/v0.6.0/sample-strategies.yaml

Further steps to get your first build running are available in our Try It! section.

For our CLI, our first release is only published to GitHub. We aim to support package managers with future releases. Until then, here are the commands to install the CLI:

$ curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.6.0/cli_0.6.0_windows_x86_64.tar.gz | tar xzf - shp.exe

$ shp help

$ curl --silent --fail --location https://github.com/shipwright-io/cli/releases/download/v0.6.0/cli_0.6.0_macOS_x86_64.tar.gz | tar -xzf - -C /usr/local/bin shp

$ shp help

$ curl --silent --fail --location "https://github.com/shipwright-io/cli/releases/download/v0.6.0/cli_0.6.0_linux_$(uname -m | sed 's/aarch64/arm64/').tar.gz" | sudo tar -xzf - -C /usr/bin shp

$ shp help

To get in touch with us, join the Kubernetes slack, and meet us in the #shipwright channel.

We are pleased to announce that Shipwright is officially an Incubating Project under the Continuous Delivery Foundation!

The Continuous Delivery Foundation (CDF) seeks to improve the world’s capacity to deliver software with security and speed. Shipwright furthers this mission by making it easy to build cloud native applications on cloud native infrastructure. The CDF is the upstream home for many other open source projects used to deliver software, such as Jenkins, Spinnaker, and Tekton. Together, we look forward to empowering developers and enabling teams to build, test, and deploy their applications with confidence.

Shipwright was featured prominently at cdCon 2021 - the CDF’s annual developer conference. The event was virtual, and all of the presentations are available on the CDF’s YouTube channel. Be sure to check out the Shipwright sessions:

Gatekeeper is a customizable admission webhook for Kubernetes, which allows you to configure policy over what resources can be created in the cluster. In particular, we can use Gatekeeper to add policy to Shipwright Builds. In this example, you can see how you can use a policy to control what source repositories Shipwright is allowed to build, so that you can have more control over what code executes inside your cluster.

Unless you are able to build images without root access in the build process, there are risks to building arbitrary Open Container Initiative (OCI) images within your cluster. Because of these risks, an organization may want to limit builds to trusted sources, such as specific GitHub organizations or an internally hosted Git server. This is an example of how that can be configured using Gatekeeper.

If you do not have Gatekeeper installed already, see install instructions here. These examples were tested with version 3.4.

At the time of writing 3.5 has an open issue with parameters, but there is an open PR to fix it.

Build resources

Either create or append to your gatekeeper-system config. This config specifies all resources that Gatekeeper will monitor.

apiVersion: config.gatekeeper.sh/v1alpha1
kind: Config
metadata:
  name: config
  namespace: "gatekeeper-system"
spec:
  sync:
    syncOnly:
      - group: "shipwright.io"
        version: "v1alpha1"
        kind: "Build"

This constraint template will let us create our ShipwrightAllowlist; in the parameters to the ShipwrightAllowlist we will specify the allowed sources, and this constraint template will apply the logic.

This constraint template is based on this example in the Gatekeeper docs.

apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: shipwrightallowlist
spec:
  crd:
    spec:
      names:
        kind: ShipwrightAllowlist
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package shipwrightallowlist

        violation[{"msg": msg}] {
          input.review.object.kind == "Build"
          repo_url := input.review.object.spec.source.url

          # Remove the protocol from the url
          repo = strings.replace_n({
            "https://": "",
            "http://": "",
            "git://": "",
            "ssh://": "",
          }, repo_url)

          # is the repo in the allowlist?
          allowlist := [
            good | source = input.parameters.allowedsources[_];
            good = startswith(repo, source)
          ]
          not any(allowlist)

          msg := sprintf("The Build repo has not been pre-approved: %v. Allowed sources are: %v", [repo, input.parameters.allowedsources])
        }

The ConstraintTemplate we created above creates a new Custom Resource Definition (CRD) called ShipwrightAllowlist. With this CRD created, we can define a list of allowed sources to build from!

apiVersion: constraints.gatekeeper.sh/v1beta1
kind: ShipwrightAllowlist
metadata:
  name: shipwrightallowlist
spec:
  match:
    kinds:
      - apiGroups: ["shipwright.io"]
        kinds: ["Build"]
  parameters:
    # Remember to terminate the sources with a `/` at the end.
    # Don't include the protocol. I.e.
    # GOOD: "github.com/shipwright-io/"
    # BAD: "https://github.com/shipwright-io"
    allowedsources:
      - "github.com/shipwright-io/"
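To see why the allowed sources should end with a `/` and omit the protocol, it can help to mirror the rule's logic outside the cluster. The following is a minimal bash sketch of that logic, not part of Gatekeeper or Shipwright: the function names `strip_protocol` and `is_allowed` and the look-alike organization `shipwright-io-fork` are hypothetical, and the real decision is always made by the Rego rule in the constraint template.

```shell
#!/usr/bin/env bash
# Local sketch of the allowlist rule; the admission decision itself is made
# by the Rego code in the ConstraintTemplate, not by this script.

# Remove the same protocol strings that the Rego rule removes.
strip_protocol() {
  local url="$1" proto
  for proto in "https://" "http://" "git://" "ssh://"; do
    url="${url//"$proto"/}"
  done
  printf '%s\n' "$url"
}

# Return 0 when the repo starts with any allowed source, 1 otherwise.
# Usage: is_allowed <repo-url> <allowed-source>...
is_allowed() {
  local repo source
  repo="$(strip_protocol "$1")"
  shift
  for source in "$@"; do
    if [[ "$repo" == "$source"* ]]; then
      return 0
    fi
  done
  return 1
}

# A repository under the trusted organization passes the check.
is_allowed "https://github.com/shipwright-io/sample-go" "github.com/shipwright-io/" \
  && echo "sample-go: allowed"

# A repository elsewhere does not.
is_allowed "https://github.com/docker-library/hello-world" "github.com/shipwright-io/" \
  || echo "hello-world: denied"

# Without the trailing slash, a look-alike organization (hypothetical name)
# would also match the prefix.
is_allowed "https://github.com/shipwright-io-fork/repo" "github.com/shipwright-io" \
  && echo "shipwright-io-fork: allowed, which is broader than intended"
```

Because the check is a plain prefix match on the URL with the protocol removed, an entry that keeps `https://` would never match, and an entry without the final `/` would also admit any organization whose name merely starts with the trusted one.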

The Build below will be created, because its source is under the github.com/shipwright-io/ organization, which our policy allows.

# sample-go-build.yaml
apiVersion: shipwright.io/v1alpha1
kind: Build
metadata:
  name: buildah-golang-build
spec:
  source:
    # We trust github.com/shipwright-io/
    url: https://github.com/shipwright-io/sample-go
    contextDir: docker-build
  strategy:
    name: buildah
    kind: ClusterBuildStrategy
  dockerfile: Dockerfile
  output:
    image: image-registry.openshift-image-registry.svc:5000/build-examples/taxi-app

Create it:

$ kubectl apply -f sample-go-build.yaml
build.shipwright.io/buildah-golang-build created

However, the Build below will not be created, because its source belongs to github.com/docker-library/, which is not in the allowlist.

# hello-world-build.yaml
apiVersion: shipwright.io/v1alpha1
kind: Build
metadata:
  name: kaniko-hello-world-build
  annotations:
    build.shipwright.io/build-run-deletion: "true"
spec:
  source:
    # Not an approved source!!!
    url: https://github.com/docker-library/hello-world
    contextDir: .
  strategy:
    name: kaniko
    kind: ClusterBuildStrategy
  dockerfile: Dockerfile.build
  output:
    image: image-registry.openshift-image-registry.svc:5000/build-examples/hello-world

Attempting to create this Build will yield an error:

$ kubectl apply -f hello-world-build.yaml
Error from server ([shipwrightallowlist] The Build repo has not been pre-approved: github.com/docker-library/hello-world. Allowed sources are: ["github.com/shipwright-io/"]): error when creating "hello-world-build.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [shipwrightallowlist] The Build repo has not been pre-approved: github.com/docker-library/hello-world. Allowed sources are: ["github.com/shipwright-io/"]

With just a few YAML files, we can add layers of protection to the cluster. You can check out Gatekeeper to learn more about the Kubernetes admission webhook, and also check out Open Policy Agent, which powers Gatekeeper and provides the policy language.

What is Shipwright? Which problems does this project try to solve?

In Part 1 of this series, we looked back at the history of delivering software applications, and how that has changed in the age of Kubernetes and cloud-native development.

In this post, we’ll introduce Shipwright and the Build APIs that make it simple to build container images on Kubernetes.

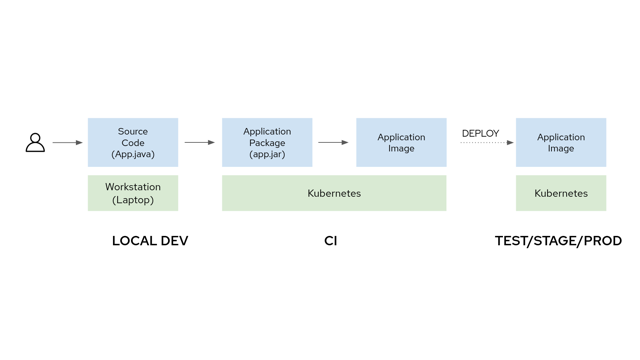

Recall from Part 1 that developers who moved from VM-based deployments to Kubernetes needed to shift their unit of delivery from binaries to container images. Teams adopted various tools to accomplish this task, ranging from Docker/Moby to Cloud-Native Buildpacks. New cloud-native applications like Jenkins-X and Tekton have emerged to deliver these container images on Kubernetes. Jenkins - the predecessor to Jenkins-X - has continued to play a prominent role in automating the building and testing of applications.

Building a container image on Kubernetes is not a simple feat. There is a wide variety of tools available: some require knowing how to write a Dockerfile, while others do not or are tailored to specific programming languages. For CI/CD platforms like Tekton, setting up a build pipeline requires extensive configuration and customization. These are burdens that are difficult to overcome for many development teams.

With Shipwright, we want to create an open and flexible framework that allows teams to easily build container images on Kubernetes. Much of the work has been inspired by OpenShift/OKD, which provides its own API for building images. At the heart of every build are the following core components:

Developers who use Docker are familiar with this process:

$ docker build -t registry.mycompany.com/myorg/myapp:latest .
$ docker push registry.mycompany.com/myorg/myapp:latest

The Build API consists of four core Custom Resource Definitions (CRDs):

- BuildStrategy and ClusterBuildStrategy - define how to build an application for an image building tool.
- Build - defines what to build, and where the application should be delivered.
- BuildRun - invokes the build, telling the Kubernetes cluster when to build your application.

BuildStrategy and ClusterBuildStrategy are related APIs to define how a given tool should be used to assemble an application. They are distinguished by their scope - BuildStrategy objects are namespace scoped, whereas ClusterBuildStrategy objects are cluster scoped.

The spec consists of a buildSteps list, whose entries look and feel like Kubernetes Container specifications. Below is an example spec for Kaniko, which can build an image from a Dockerfile within a container:

spec:
  buildSteps:
    - name: build-and-push
      image: gcr.io/kaniko-project/executor:v1.3.0
      workingDir: /workspace/source
      securityContext:
        runAsUser: 0
        capabilities:
          add:
            - CHOWN
            - DAC_OVERRIDE
            - FOWNER
            - SETGID
            - SETUID
            - SETFCAP
      env:
        - name: DOCKER_CONFIG
          value: /tekton/home/.docker
      command:
        - /kaniko/executor
      args:
        - --skip-tls-verify=true
        - --dockerfile=$(build.dockerfile)
        - --context=/workspace/source/$(build.source.contextDir)
        - --destination=$(build.output.image)
        - --oci-layout-path=/workspace/output/image
        - --snapshotMode=redo
      resources:
        limits:
          cpu: 500m
          memory: 1Gi
        requests:
          cpu: 250m
          memory: 65Mi

The Build object provides a playbook on how to assemble your specific application. The simplest build consists of a git source, a build strategy, and an output image:

apiVersion: build.dev/v1alpha1
kind: Build
metadata:
  name: kaniko-golang-build
  annotations:
    build.build.dev/build-run-deletion: "true"
spec:
  source:
    url: https://github.com/sbose78/taxi
  strategy:
    name: kaniko
    kind: ClusterBuildStrategy
  output:
    image: registry.mycompany.com/my-org/taxi-app:latest

Builds can be extended to push to private registries, use a different Dockerfile, and more. Read the docs for a deeper dive on what is possible.
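The Kaniko strategy shown earlier contains placeholders such as `$(build.dockerfile)` and `$(build.output.image)`, which stand for values taken from the Build. Shipwright fills these in itself when a build runs; the bash sketch below only illustrates the kind of text substitution involved and is not how Shipwright implements it. The helper name `fill` is hypothetical, and the Dockerfile value is an assumption because the Build above does not set one.

```shell
#!/usr/bin/env bash
# Illustration only: Shipwright resolves these placeholders itself.

# Replace every literal occurrence of a placeholder in a string.
# Usage: fill <text> <placeholder> <value>
fill() {
  local text="$1" placeholder="$2" value="$3"
  # Quoting the placeholder makes bash match it literally, not as a pattern.
  printf '%s\n' "${text//"$placeholder"/$value}"
}

# The output image comes from the Build above; the Dockerfile name is assumed.
dockerfile="Dockerfile"
output_image="registry.mycompany.com/my-org/taxi-app:latest"

# Single quotes keep the shell from treating $(...) as command substitution.
fill '--dockerfile=$(build.dockerfile)' '$(build.dockerfile)' "$dockerfile"
fill '--destination=$(build.output.image)' '$(build.output.image)' "$output_image"
```

Read this way, the strategy describes how the tool is invoked, while each Build supplies the concrete values for that invocation.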

Each BuildRun object invokes a build on your cluster. You can think of these as Kubernetes Jobs or Tekton TaskRuns - they represent a workload on your cluster, ultimately resulting in a running Pod.

A BuildRun invocation can be very simple and concise:

apiVersion: build.dev/v1alpha1
kind: BuildRun
metadata:
  name: kaniko-golang-buildrun
spec:
  buildRef:
    name: kaniko-golang-build

As the build runs, the status of the BuildRun object is updated with the current progress. Work is in progress to standardize this status reporting with status conditions.

You may have noticed that the Kaniko build strategy above references two directories in the build step:

- /tekton/home
- /workspace

Where do these directories come from? Shipwright is built on top of Tekton, taking advantage of its features to simplify CI/CD workloads. Among the things that Tekton provides are a Linux user $HOME directory (/tekton/home) and a workspace where the application can be assembled (/workspace).

Shipwright can be installed in one of two ways:

If your cluster has Operator Lifecycle Manager installed, option 2 is the recommended approach. OLM will take care of installing Tekton as well as Shipwright.

The samples contain build strategies for container-based image build tools like Kaniko, Buildah, Source-to-Image and Cloud-Native Buildpacks.

Update 2020-11-30: Added link to Part 2 of this series

What is Shipwright? Which problems does this project try to solve?

In Part 1 of this series, we’ll look back at the history of delivering software applications, and how that has changed in the age of Kubernetes and cloud-native development.

In Part 2 of this series, we’ll introduce Shipwright and the Build APIs that make it simple to build container images on Kubernetes.

Think back to 2010. If you were a professional software engineer, a hobbyist, or a student, which tools and programming languages did you use? How did you package your applications? How did you or your team release software for end-users to consume?

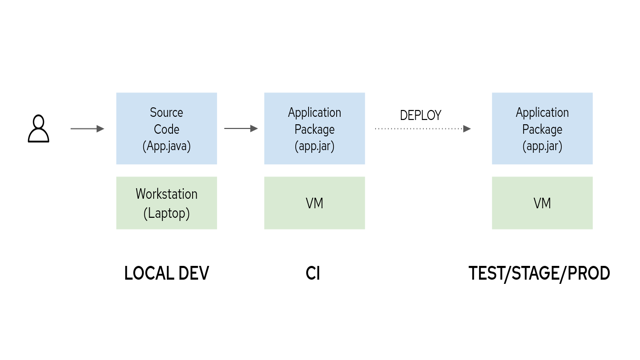

Back then, I was a junior software engineer at a small technology consulting company. My team maintained a suite of custom software tools whose back-end was written in Java. Our release process consisted of creating a tag in our version control system (SVN), compiling a JAR file on our laptops, and uploading the JAR to our client’s SFTP site. After submitting a ticket and undergoing a change control review with our client’s IT department, our software would be released during a scheduled maintenance window.

For engineers in larger enterprises, this experience should feel familiar. You may have used C# or C++, or you may have been adventurous and tried Ruby on Rails. Perhaps instead of compiling the application yourself, a separate release team was responsible for building the application on secured infrastructure. Your releases may have undergone extensive acceptance testing in a staging environment before being promoted to production (and those practices may still continue today). If you were fortunate, some of the release tasks were automated by emerging continuous integration tools like Hudson and Jenkins.

The emergence of Docker/Moby and Kubernetes changed the unit of delivery. With both of these platforms, developers package their software in container images rather than executables, JAR files, or script bundles. Moving to this method of delivery was not a simple task, since many teams had to learn entirely new sets of skills to deploy their code.